If you use docker-compose for Django projects with Celery workers, you have probably had to restart the worker by hand after every code change. I can feel your frustration, and here is a possible solution to that problem.

Celery provided auto-reload support up to version 3.1, but the maintainers then dropped it because they were running into issues and had a hard time implementing the feature, as you can read on this GitHub issue. So now we are on our own.

Docker provides a feature called volumes, which lets us mount our source code into the container. Combined with the `python manage.py runserver` command, this means we don't have to restart the server every time the code changes. The following is a common way to set it up:

    web:
      build: .
      restart: always
      env_file:
        - .env
      entrypoint: ./docker-entrypoint-local.sh
      volumes:
        - .:/code
      ports:
        - "8082:8000"
      links:
        - postgres
        - rabbitmq

As you can see, I have used a volume to sync my current directory with the `/code` directory of the container, so the container always runs the code that is on my machine. The entrypoint file is as follows.

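    #!/bin/sh
    # Wait until the database accepts connections
    # (the host name must match your database service name)
    ./wait-for-it.sh db:5432
    # Create and apply migrations, then run the project's DB setup script
    python3 manage.py makemigrations
    python3 manage.py migrate
    python3 my_celery_project/setup_db.py
    python3 manage.py collectstatic --noinput
    # Development server; it reloads itself when the code changes
    python3 manage.py runserver 0.0.0.0:8000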

Now the code stays in sync with the container, and the runserver command reloads on every change, which makes development faster. We can use the same trick to auto-reload the Celery worker. You can see the runserver command file on GitHub.

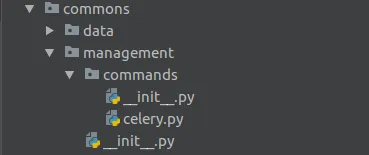

Django also lets us create custom management commands for our apps. To create a command, you need to create a `management` package with a `commands` package inside it. Make sure this folder lives inside an app that is registered in `INSTALLED_APPS`.
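
If you prefer to create that layout from a script rather than by hand, here is a minimal sketch. The helper name `scaffold_management_package` and the app directory `my_app` are made up for illustration; point it at one of your own registered apps.

```python
from pathlib import Path


def scaffold_management_package(app_dir):
    """Create management/commands/ inside a Django app directory.

    Returns the path of the commands package.
    """
    commands_dir = Path(app_dir) / "management" / "commands"
    commands_dir.mkdir(parents=True, exist_ok=True)
    # Give both levels an __init__.py so each one is a regular Python package
    for package in (commands_dir.parent, commands_dir):
        (package / "__init__.py").touch()
    return commands_dir


# Example (hypothetical app directory):
# scaffold_management_package("my_app")
```

Calling it twice is harmless, because existing directories and files are left in place.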

Now you need to create a `celery.py` file in the `commands` package, as you can see in the image. Copy and paste the following code into it.

    import shlex
    import subprocess

    from django.core.management.base import BaseCommand
    from django.utils import autoreload


    def restart_celery(*args, **kwargs):
        # Kill any worker left over from the previous run
        kill_worker_cmd = 'pkill -9 celery'
        subprocess.call(shlex.split(kill_worker_cmd))
        # Start a fresh worker; this call blocks while the worker runs
        start_worker_cmd = 'celery -A my_celery_project worker -l info'
        subprocess.call(shlex.split(start_worker_cmd))


    class Command(BaseCommand):

        def handle(self, *args, **options):
            self.stdout.write('Starting celery worker with autoreload...')
            # For Django versions older than 2.2, use the following line instead
            # autoreload.main(restart_celery, args=None, kwargs=None)
            autoreload.run_with_reloader(restart_celery)

As you can see, we reuse the auto-reload functionality that Django built to restart its local development server. You can use this command in the docker-compose file as follows.

    worker:
      build: .
      restart: always
      env_file:
        - .env
      command: python3 manage.py celery
      volumes:
        - .:/code
      links:
        - rabbitmq
        - postgres

The worker will now restart itself automatically whenever you change the code.
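
For some intuition about what the reloader does for us: Django's default reloader (`StatReloader`) works by polling file modification times. The sketch below is a heavily simplified, standalone illustration of that idea, not Django's actual code. The function names `snapshot_mtimes`, `changed_files` and `watch` are my own, and it only looks at `*.py` files under one directory.

```python
import time
from pathlib import Path


def snapshot_mtimes(root, pattern="*.py"):
    """Map every matching file under root to its last-modified time."""
    return {path: path.stat().st_mtime for path in Path(root).rglob(pattern)}


def changed_files(before, after):
    """Return files that were added, removed, or modified between snapshots."""
    return sorted(
        path
        for path in before.keys() | after.keys()
        if before.get(path) != after.get(path)
    )


def watch(root, on_change, interval=1.0, max_polls=None):
    """Poll root and call on_change(paths) whenever the snapshot differs.

    max_polls=None polls forever; a number stops after that many polls.
    """
    previous = snapshot_mtimes(root)
    polls = 0
    while max_polls is None or polls < max_polls:
        time.sleep(interval)
        current = snapshot_mtimes(root)
        diff = changed_files(previous, current)
        if diff:
            on_change(diff)
        previous = current
        polls += 1
```

In our management command, Django does this watching itself and reruns `restart_celery` when something changes, so you don't need this code in the project. It is only here to show why syncing the code through a volume is enough to trigger a restart.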